#include <boosting.h>

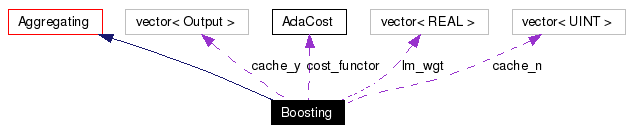

Inheritance diagram for Boosting:

| REAL | cost () const |

| virtual void | train_gd () |

| Training using gradient-descent. | |

| pDataWgt | sample_weight () const |

| Sample weights for new hypothesis. | |

| struct | _boost_gd |

Public Member Functions | |

| Boosting (bool cvx=false, const cost::Cost &=cost::_cost) | |

| Boosting (const Aggregating &) | |

| Boosting (std::istream &is) | |

| virtual const id_t & | id () const |

| virtual Boosting * | create () const |

| Create a new object using the default constructor. | |

| virtual Boosting * | clone () const |

| Create a new object by replicating itself. | |

| bool | is_convex () const |

| REAL | model_weight (UINT n) const |

| void | use_gradient_descent (bool gd=true) |

| void | set_min_cost (REAL mincst) |

| void | set_min_error (REAL minerr) |

| virtual REAL | margin_norm () const |

| The normalization term for margins. | |

| virtual REAL | margin_of (const Input &, const Output &) const |

| Report the (unnormalized) margin of an example (x, y). | |

| virtual REAL | margin (UINT) const |

| Report the (unnormalized) margin of the example i. | |

| virtual bool | support_weighted_data () const |

| Whether the learning model/algorithm supports unequally weighted data. | |

| virtual void | train () |

| Train with preset data set and sample weight. | |

| virtual void | reset () |

| virtual Output | operator() (const Input &) const |

| virtual Output | get_output (UINT) const |

| Get the output of the hypothesis on the idx-th input. | |

| virtual void | set_train_data (const pDataSet &, const pDataWgt &=0) |

| Set the data set and sample weight to be used in training. | |

Public Attributes | |

| const cost::Cost & | cost_functor |

| Calculate the pointwise cost c(F(x), y) and its derivative. | |

Protected Member Functions | |

| void | clear_cache (UINT idx) const |

| void | clear_cache () const |

| REAL | model_weight_sum () const |

| virtual bool | serialize (std::ostream &, ver_list &) const |

| virtual bool | unserialize (std::istream &, ver_list &, const id_t &=NIL_ID) |

| pLearnModel | train_with_smpwgt (const pDataWgt &) const |

| REAL | assign_weight (const DataWgt &sw, const LearnModel &l) |

| Assign weight to a newly generated hypothesis. | |

| pDataWgt | update_smpwgt (const DataWgt &sw, const LearnModel &l) |

| Update sample weights after adding the new hypothesis. | |

| virtual REAL | convex_weight (const DataWgt &, const LearnModel &) |

| virtual REAL | linear_weight (const DataWgt &, const LearnModel &) |

| virtual void | convex_smpwgt (DataWgt &) |

| virtual void | linear_smpwgt (DataWgt &) |

Protected Attributes | |

| std::vector< REAL > | lm_wgt |

| hypothesis weight | |

| bool | convex |

| convex or linear combination | |

| bool | grad_desc_view |

| Traditional way or gradient descent. | |

| REAL | min_cst |

| REAL | min_err |

Classes | |

| class | BoostWgt |

| Weight in gradient descent. More... | |

As one specific aggregating technique, boosting generates a linear combination of hypotheses (optionally restricted to a convex combination) by sequentially calling a weak learner (the base model) with varying sample weights.

For many problems, a convex combination may yield the same aggregated model as a linear combination does. However, the training algorithms used for the two kinds of combination may differ. Whether to use convex or linear combinations is specified in the constructor (Boosting()). The default is linear combination.

Traditional boosting techniques (e.g., AdaBoost) carry out training in the following form to generate n hypotheses: starting from the initial sample weights, each round calls the weak learner with the current sample weights to obtain a new hypothesis, assigns a weight to that hypothesis, and updates the sample weights; the final model is the weighted sum of the hypotheses (followed by a sign operation).

(See the code of Boosting::train() for more details.) The function assign_weight() calculates the weight for the hypothesis; update_smpwgt() updates the sample weights. Modifying these two functions properly is usually enough to design a new boosting algorithm. (To be precise, the functions to modify are convex_weight() and convex_smpwgt(), and/or linear_weight() and linear_smpwgt().)
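The round structure described above can be sketched as a small generic loop. This is only an illustration: `Hypothesis`, `Weights`, `Ensemble`, and `boost` are hypothetical stand-ins rather than LEMGA types, and the three callbacks merely play the roles of the weak learner, assign_weight(), and update_smpwgt(). Passing the new hypothesis weight to the update callback is an assumption made for convenience.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-ins for this sketch; they are not the LEMGA types.
using Hypothesis = std::function<double(double)>;  // maps an input x to +1 or -1
using Weights = std::vector<double>;               // one weight per training sample

struct Ensemble {
    std::vector<Hypothesis> h;   // the generated hypotheses
    std::vector<double> alpha;   // one weight per hypothesis

    // Weighted sum of the hypotheses followed by the sign operation.
    double operator()(double x) const {
        double s = 0.0;
        for (std::size_t t = 0; t < h.size(); ++t) s += alpha[t] * h[t](x);
        return s >= 0.0 ? 1.0 : -1.0;
    }
};

// Generic boosting loop: d holds the initial sample weights.
Ensemble boost(std::size_t n_rounds, Weights d,
               const std::function<Hypothesis(const Weights&)>& weak_learner,
               const std::function<double(const Weights&, const Hypothesis&)>& assign_weight,
               const std::function<Weights(const Weights&, const Hypothesis&, double)>& update_smpwgt)
{
    assert(!d.empty());
    Ensemble e;
    for (std::size_t t = 0; t < n_rounds; ++t) {
        Hypothesis g = weak_learner(d);   // train on the current sample weights
        double a = assign_weight(d, g);   // weight for the new hypothesis
        e.h.push_back(g);
        e.alpha.push_back(a);
        d = update_smpwgt(d, g, a);       // re-weight the samples
    }
    return e;
}
```

Swapping in different `assign_weight` and `update_smpwgt` callbacks changes the algorithm without touching the loop, which mirrors the design remark above.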

In the gradient-descent view, the cost functional

\[ C(F) = \sum_i w_i \cdot c(F(x_i), y_i) \]

is the sample average of some pointwise cost c (cost_functor). A corresponding boosting algorithm decreases this cost functional by adding suitable weak hypotheses to F.
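As a concrete illustration of the cost functional, the sketch below evaluates the weighted sum of a pointwise cost over precomputed outputs F(x_i). The exponential cost and the names `exp_cost` and `cost_functional` are assumptions chosen for the example; they are not part of the library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Exponential pointwise cost c(F, y) = exp(-y F); an assumption for illustration.
double exp_cost(double F, double y) { return std::exp(-y * F); }

// C(F) = sum_i w_i * c(F(x_i), y_i), given the outputs Fx[i] = F(x_i).
double cost_functional(const std::vector<double>& w,
                       const std::vector<double>& Fx,
                       const std::vector<double>& y,
                       double (*c)(double, double) = exp_cost)
{
    assert(w.size() == Fx.size() && Fx.size() == y.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) sum += w[i] * c(Fx[i], y[i]);
    return sum;
}
```

With F identically zero, every pointwise exponential cost is 1, so the functional reduces to the sum of the sample weights.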

The gradient, which is itself a function, is also defined on the training samples:

\[ \nabla C(F)(x_i) = w_i \cdot c'_F (F(x_i), y_i)\,. \]

The next hypothesis should maximize its inner product with \( -\nabla C(F) \). Thus the sample weights used to train this hypothesis are

\[ D_i \propto -\frac{w_i}{y_i} c'_F (F(x_i), y_i) \]

where \( c'_F(\cdot, \cdot) \) is the partial derivative with respect to the first argument (see cost_functor.deriv1()).
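The sample-weight formula can be sketched as follows, again assuming the exponential cost c(F, y) = exp(-y F) and labels in {+1, -1}. The names `exp_cost_deriv1` and `gradient_sample_weights` are hypothetical, and the final normalization to a probability vector is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Partial derivative of exp(-y F) with respect to F; illustrative cost only.
double exp_cost_deriv1(double F, double y) { return -y * std::exp(-y * F); }

// D_i proportional to -(w_i / y_i) * c'_F(F(x_i), y_i), normalized to sum to 1.
std::vector<double> gradient_sample_weights(const std::vector<double>& w,
                                            const std::vector<double>& Fx,
                                            const std::vector<double>& y,
                                            double (*deriv1)(double, double) = exp_cost_deriv1)
{
    assert(w.size() == Fx.size() && Fx.size() == y.size());
    std::vector<double> D(w.size());
    double total = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) {
        D[i] = -(w[i] / y[i]) * deriv1(Fx[i], y[i]);
        total += D[i];
    }
    assert(total > 0.0);             // holds for a decreasing cost such as exp(-y F)
    for (double& d : D) d /= total;  // turn the weights into a probability vector
    return D;
}
```

Under this cost, an example that F currently misclassifies receives a larger weight than one it classifies correctly.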

Denote the newly generated hypothesis by g. The weight for g can be determined by a line search along \( F + \alpha g \), where \( \alpha \) is a positive scalar. The weight also makes the updated vector of sample weights perpendicular to the vector of errors of g.
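A line search of this kind might look like the sketch below. It uses a plain golden-section search as a stand-in for the library's line search template, assumes the exponential cost, and restricts the step to a bounded interval; `golden_section`, `hypothesis_weight`, and `alpha_max` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Golden-section search for the minimizer of a unimodal function on [lo, hi].
double golden_section(const std::function<double(double)>& f,
                      double lo, double hi, double tol = 1e-8)
{
    assert(lo < hi);
    const double r = (std::sqrt(5.0) - 1.0) / 2.0;
    double a = hi - r * (hi - lo), b = lo + r * (hi - lo);
    double fa = f(a), fb = f(b);
    while (hi - lo > tol) {
        if (fa < fb) { hi = b; b = a; fb = fa; a = hi - r * (hi - lo); fa = f(a); }
        else         { lo = a; a = b; fa = fb; b = lo + r * (hi - lo); fb = f(b); }
    }
    return 0.5 * (lo + hi);
}

// Weight for g: the alpha in [0, alpha_max] minimizing
// C(F + alpha g) = sum_i w_i exp(-y_i (F(x_i) + alpha g(x_i))).
double hypothesis_weight(const std::vector<double>& w,
                         const std::vector<double>& Fx,
                         const std::vector<double>& gx,
                         const std::vector<double>& y,
                         double alpha_max = 10.0)
{
    assert(w.size() == Fx.size() && Fx.size() == gx.size() && gx.size() == y.size());
    auto cost = [&](double alpha) {
        double sum = 0.0;
        for (std::size_t i = 0; i < w.size(); ++i)
            sum += w[i] * std::exp(-y[i] * (Fx[i] + alpha * gx[i]));
        return sum;
    };
    return golden_section(cost, 0.0, alpha_max);
}
```

For F = 0, uniform weights, and a hypothesis with weighted error 1/4, the minimizer is \( \tfrac{1}{2}\ln 3 \), which agrees with the familiar AdaBoost weight \( \tfrac{1}{2}\ln\frac{1-\epsilon}{\epsilon} \).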

We use the line search template to implement training under the gradient-descent view. Employing a different cost_functor should be enough to get a different boosting algorithm. See also _boost_gd.

Definition at line 89 of file boosting.h.

Boosting::Boosting (bool cvx=false, const cost::Cost &=cost::_cost)

Definition at line 20 of file boosting.cpp. Referenced by Boosting::clone(), and Boosting::create().

Boosting::Boosting (const Aggregating &)

Definition at line 25 of file boosting.cpp.

Boosting::Boosting (std::istream &is)

Definition at line 104 of file boosting.h.

REAL Boosting::assign_weight (const DataWgt &sw, const LearnModel &l)

Assign weight to a newly generated hypothesis. We assume l has not yet been added to the aggregation but will be.

Definition at line 163 of file boosting.h. References Boosting::convex, Boosting::convex_weight(), Boosting::linear_weight(), and LearnModel::n_samples. Referenced by Boosting::train().

void Boosting::clear_cache () const

Definition at line 138 of file boosting.h. References LearnModel::n_samples. Referenced by Boosting::get_output(), Boosting::reset(), CGBoost::set_aggregation_size(), and Boosting::set_train_data().

void Boosting::clear_cache (UINT idx) const

Definition at line 133 of file boosting.h. References LearnModel::n_samples. Referenced by _boost_gd::set_weight().

virtual Boosting* Boosting::clone () const

Create a new object by replicating itself.

The code for a derived class Derived is always return new Derived(*this);

Implements Aggregating. Reimplemented in AdaBoost, CGBoost, LPBoost, and MgnBoost. Definition at line 109 of file boosting.h. References Boosting::Boosting().

virtual void Boosting::convex_smpwgt (DataWgt &)

Definition at line 211 of file boosting.cpp. References OBJ_FUNC_UNDEFINED. Referenced by Boosting::update_smpwgt().

virtual REAL Boosting::convex_weight (const DataWgt &, const LearnModel &)

Assign weight to a newly generated hypothesis. We assume l has not yet been added to the aggregation but will be.

Reimplemented in AdaBoost. Definition at line 204 of file boosting.cpp. References OBJ_FUNC_UNDEFINED. Referenced by Boosting::assign_weight().

REAL Boosting::cost () const

Definition at line 218 of file boosting.cpp. References _cost, Boosting::get_output(), LearnModel::n_samples, LearnModel::ptd, and LearnModel::ptw. Referenced by _boost_gd::cost(), and Boosting::train().

virtual Boosting* Boosting::create () const

Create a new object using the default constructor.

The code for a derived class Derived is always return new Derived();

Implements Aggregating. Reimplemented in AdaBoost, CGBoost, LPBoost, and MgnBoost. Definition at line 108 of file boosting.h. References Boosting::Boosting().

virtual Output Boosting::get_output (UINT) const

Get the output of the hypothesis on the idx-th input.

Reimplemented from LearnModel. Definition at line 104 of file boosting.cpp. References LearnModel::_n_out, Boosting::clear_cache(), LearnModel::exact_dimensions(), Aggregating::lm, Boosting::lm_wgt, Aggregating::n_in_agg, LearnModel::ptd, LearnModel::ptw, and LearnModel::train_data(). Referenced by Boosting::cost(), CGBoost::linear_smpwgt(), Boosting::margin(), and Boosting::sample_weight().

virtual const id_t& Boosting::id () const

Implements Object. Reimplemented in AdaBoost, CGBoost, LPBoost, and MgnBoost. Referenced by Boosting::train_with_smpwgt().

bool Boosting::is_convex () const

Definition at line 111 of file boosting.h. References Boosting::convex.

virtual void Boosting::linear_smpwgt (DataWgt &)

Reimplemented in AdaBoost, and CGBoost. Definition at line 214 of file boosting.cpp. References OBJ_FUNC_UNDEFINED. Referenced by Boosting::update_smpwgt().

virtual REAL Boosting::linear_weight (const DataWgt &, const LearnModel &)

Reimplemented in AdaBoost, and CGBoost. Definition at line 207 of file boosting.cpp. References OBJ_FUNC_UNDEFINED. Referenced by Boosting::assign_weight().

virtual REAL Boosting::margin (UINT) const

Report the (unnormalized) margin of the example i.

Reimplemented from LearnModel. Definition at line 76 of file boosting.cpp. References Boosting::get_output(), INFINITESIMAL, and LearnModel::ptd.

virtual REAL Boosting::margin_norm () const

The normalization term for margins.

The margin concept can be normalized or unnormalized. For example, for a perceptron model, the unnormalized margin would be the weighted sum of the input features, the normalized margin would be the distance to the hyperplane, and the normalization term is the norm of the hyperplane weight.

Since the normalization term is usually a constant, it is more efficient to precompute it than to recalculate it every time a margin is requested. The best way is to use a cache. Here I use an easier way: let the users decide when to compute the normalization term.

Reimplemented from LearnModel. Definition at line 67 of file boosting.cpp. References Boosting::convex, and Boosting::model_weight_sum().
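The perceptron example can be made concrete with a small sketch in which the caller decides when the normalization term is recomputed. `Perceptron` and its members are hypothetical names for this illustration, and multiplying the weighted sum by a label y in {+1, -1} is an assumption of the sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical perceptron used only to illustrate the two margin notions.
struct Perceptron {
    std::vector<double> w;  // hyperplane weight
    double norm = 1.0;      // normalization term, refreshed on request

    // The user decides when to recompute the normalization term.
    void update_norm() {
        double s = 0.0;
        for (double wi : w) s += wi * wi;
        norm = std::sqrt(s);
    }

    // Unnormalized margin: y times the weighted sum of the input features.
    double margin_of(const std::vector<double>& x, double y) const {
        assert(x.size() == w.size());
        double s = 0.0;
        for (std::size_t i = 0; i < w.size(); ++i) s += w[i] * x[i];
        return y * s;
    }

    // Normalized margin: signed distance to the hyperplane.
    double normalized_margin(const std::vector<double>& x, double y) const {
        return margin_of(x, y) / norm;
    }
};
```

Because the norm is stored rather than recomputed, repeated margin queries cost only one dot product each, at the price of the caller having to call `update_norm()` after changing `w`.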

virtual REAL Boosting::margin_of (const Input &, const Output &) const

Report the (unnormalized) margin of an example (x, y).

Reimplemented from LearnModel. Definition at line 71 of file boosting.cpp. References INFINITESIMAL.

REAL Boosting::model_weight (UINT n) const

Definition at line 112 of file boosting.h. References Boosting::lm_wgt.

REAL Boosting::model_weight_sum () const

Definition at line 146 of file boosting.h. References Boosting::lm_wgt, and Aggregating::n_in_agg. Referenced by Boosting::margin_norm().

virtual Output Boosting::operator() (const Input &) const

Implements LearnModel. Definition at line 82 of file boosting.cpp. References LearnModel::_n_out, LearnModel::exact_dimensions(), Aggregating::lm, Boosting::lm_wgt, and Aggregating::n_in_agg.

virtual void Boosting::reset ()

Delete learning models stored in lm. This is only used in operator= and load().

Reimplemented from Aggregating. Reimplemented in CGBoost. Definition at line 59 of file boosting.cpp. References Boosting::clear_cache(), Boosting::lm_wgt, and Aggregating::reset(). Referenced by CGBoost::reset().

pDataWgt Boosting::sample_weight () const

Sample weights for new hypothesis. Compute the weight (probability) vector according to

\[ D_i \propto -\frac{w_i}{y_i} c'_F (F(x_i), y_i) \]

Definition at line 232 of file boosting.cpp. References _cost_deriv, Boosting::get_output(), Aggregating::n_in_agg, LearnModel::n_samples, LearnModel::ptd, and LearnModel::ptw. Referenced by _boost_gd::gradient(), and Boosting::train().

virtual bool Boosting::serialize (std::ostream &, ver_list &) const

Reimplemented from Aggregating. Reimplemented in CGBoost. Definition at line 31 of file boosting.cpp. References Boosting::convex, Aggregating::lm, Boosting::lm_wgt, and SERIALIZE_PARENT.

void Boosting::set_min_cost (REAL mincst)

Definition at line 114 of file boosting.h. References Boosting::min_cst.

void Boosting::set_min_error (REAL minerr)

Definition at line 115 of file boosting.h. References Boosting::min_err.

virtual void Boosting::set_train_data (const pDataSet &, const pDataWgt &=0)

Set the data set and sample weight to be used in training.

If the learning model/algorithm can only do training using uniform sample weight, i.e., support_weighted_data() returns

In order to make the life easier, when support_weighted_data() returns

Reimplemented from Aggregating. Definition at line 144 of file boosting.cpp. References Boosting::clear_cache(), LearnModel::ptd, and Aggregating::set_train_data().

virtual bool Boosting::support_weighted_data () const

Whether the learning model/algorithm supports unequally weighted data.

Reimplemented from LearnModel. Definition at line 120 of file boosting.h.

virtual void Boosting::train ()

Train with preset data set and sample weight.

Implements LearnModel. Reimplemented in AdaBoost, CGBoost, LPBoost, and MgnBoost. Definition at line 151 of file boosting.cpp. References Boosting::assign_weight(), Boosting::cost(), Boosting::grad_desc_view, Aggregating::lm, Aggregating::lm_base, Boosting::lm_wgt, Aggregating::max_n_model, Boosting::min_cst, Boosting::min_err, Aggregating::n_in_agg, LearnModel::ptd, LearnModel::ptw, Boosting::sample_weight(), LearnModel::set_dimensions(), Aggregating::size(), LearnModel::train_c_error(), Boosting::train_gd(), Boosting::train_with_smpwgt(), and Boosting::update_smpwgt(). Referenced by MgnBoost::train(), CGBoost::train(), and AdaBoost::train().

virtual void Boosting::train_gd ()

Training using gradient-descent.

Reimplemented in CGBoost, and MgnBoost. Definition at line 180 of file boosting.cpp. References Boosting::convex, and lemga::iterative_optimize(). Referenced by Boosting::train().

pLearnModel Boosting::train_with_smpwgt (const pDataWgt &) const

Definition at line 186 of file boosting.cpp. References Boosting::convex, Boosting::id(), Aggregating::lm_base, Aggregating::max_n_model, Aggregating::n_in_agg, LearnModel::ptd, and LearnModel::ptw. Referenced by _boost_gd::gradient(), and Boosting::train().

virtual bool Boosting::unserialize (std::istream &, ver_list &, const id_t &=NIL_ID)

Reimplemented from Aggregating. Reimplemented in CGBoost. Definition at line 40 of file boosting.cpp. References Boosting::convex, Aggregating::lm, Boosting::lm_wgt, Object::NIL_ID, and UNSERIALIZE_PARENT.

pDataWgt Boosting::update_smpwgt (const DataWgt &sw, const LearnModel &l)

Update sample weights after adding the new hypothesis. We assume l has just been added to the aggregation.

Definition at line 173 of file boosting.h. References Boosting::convex, Boosting::convex_smpwgt(), Boosting::linear_smpwgt(), Aggregating::lm, Aggregating::n_in_agg, and LearnModel::n_samples. Referenced by Boosting::train().

void Boosting::use_gradient_descent (bool gd=true)

Definition at line 113 of file boosting.h. References Boosting::grad_desc_view. Referenced by MgnBoost::MgnBoost(), and CGBoost::unserialize().

friend struct _boost_gd

Definition at line 194 of file boosting.h.

bool Boosting::convex

convex or linear combination

Definition at line 92 of file boosting.h. Referenced by Boosting::assign_weight(), Boosting::is_convex(), LPBoost::LPBoost(), Boosting::margin_norm(), Boosting::serialize(), MgnBoost::train(), MgnBoost::train_gd(), CGBoost::train_gd(), Boosting::train_gd(), Boosting::train_with_smpwgt(), Boosting::unserialize(), and Boosting::update_smpwgt().

const cost::Cost& Boosting::cost_functor

Calculate the pointwise cost c(F(x), y) and its derivative.

Definition at line 100 of file boosting.h.

bool Boosting::grad_desc_view

Traditional way or gradient descent.

Definition at line 95 of file boosting.h. Referenced by CGBoost::serialize(), CGBoost::set_aggregation_size(), MgnBoost::train(), LPBoost::train(), CGBoost::train(), Boosting::train(), and Boosting::use_gradient_descent().

std::vector< REAL > Boosting::lm_wgt

hypothesis weight

Definition at line 91 of file boosting.h. Referenced by CGBoost::CGBoost(), Boosting::get_output(), CGBoost::linear_smpwgt(), AdaBoost::linear_smpwgt(), Boosting::model_weight(), Boosting::model_weight_sum(), Boosting::operator()(), Boosting::reset(), Boosting::serialize(), CGBoost::set_aggregation_size(), _boost_cg::set_weight(), _boost_gd::set_weight(), Boosting::train(), CGBoost::unserialize(), Boosting::unserialize(), and _boost_gd::weight().

REAL Boosting::min_cst

Definition at line 96 of file boosting.h. Referenced by Boosting::set_min_cost(), and Boosting::train().

REAL Boosting::min_err

Definition at line 96 of file boosting.h. Referenced by Boosting::set_min_error(), and Boosting::train().

1.4.6